B. HARIHARAN, P. ARBELAEZ, R. GIRSHICK AND J. MALIK

CVPR, 2015. (ORAL)

Abstract

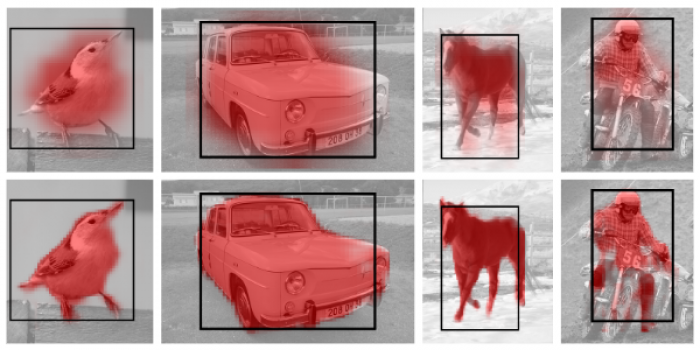

Recognition algorithms based on convolutional networks (CNNs) typically use the output of the last layer as a feature representation. However, the information in this layer may be too coarse spatially to allow precise localization. In contrast, earlier layers may be precise in localization but will not capture semantics. To get the best of both worlds, we define the hypercolumn at a pixel as the vector of activations of all CNN units above that pixel. Using hypercolumns as pixel descriptors, we show results on three fine-grained localization tasks: simultaneous detection and segmentation [22], where we improve the state of the art from 49.7 mean AP^r [22] to 60.0; keypoint localization, where we get a 3.3 point boost over [20]; and part labeling, where we show a 6.6 point gain over a strong baseline.
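The hypercolumn definition above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `upsample_nearest` and `hypercolumns`, the `(C, h, w)` array layout, and the toy layer shapes are all assumptions made for illustration, and nearest-neighbor upsampling is used here only to keep the example short. The idea it shows is that feature maps from several layers are resized to a common resolution and stacked along the channel axis, so that each pixel gets one long descriptor.

```python
import numpy as np


def upsample_nearest(fmap, out_h, out_w):
    """Resize a (C, h, w) feature map to (C, out_h, out_w).

    Nearest-neighbor lookup is an assumption chosen for brevity;
    a smoother interpolation could be substituted here.
    """
    _, h, w = fmap.shape
    # For each output row/column, pick the source row/column that covers it.
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return fmap[:, rows[:, None], cols[None, :]]


def hypercolumns(feature_maps, out_h, out_w):
    """Stack activations from several layers into per-pixel descriptors.

    feature_maps: list of (C_i, h_i, w_i) arrays, one per chosen layer
                  (hypothetical inputs; any CNN could supply them).
    Returns an array of shape (sum(C_i), out_h, out_w).
    """
    upsampled = [upsample_nearest(f, out_h, out_w) for f in feature_maps]
    return np.concatenate(upsampled, axis=0)


# Toy stand-ins for three layers: fine and shallow, then coarser and deeper.
layers = [
    np.random.rand(4, 8, 8),
    np.random.rand(8, 4, 4),
    np.random.rand(16, 2, 2),
]
hc = hypercolumns(layers, out_h=8, out_w=8)

# The hypercolumn at pixel (row=3, col=5) is the vector of all stacked activations.
descriptor = hc[:, 3, 5]
print(hc.shape, descriptor.shape)
```

With these toy shapes, the descriptor at each pixel has 4 + 8 + 16 = 28 entries, mixing the spatially precise early-layer activations with the coarser, more semantic later ones.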